What Is Deep Learning (DL)? A Simple, Detailed Introduction for Dummies

Lately, most of us have heard buzzwords like Deep Learning, Machine Learning, AI, and Data Science in almost every tech conversation. Sadly, many of us are not clear about what they mean or which one to use in what context. In this article, we will demystify one of these terms: Deep Learning.

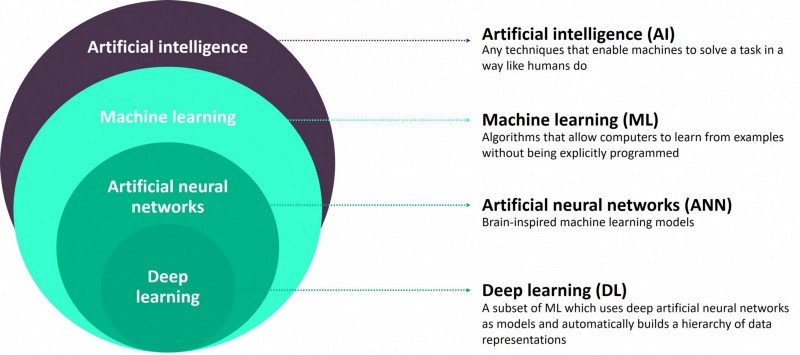

In short, Deep Learning is a subdomain of Machine Learning, which is itself a subdomain of Artificial Intelligence.

A Quick Recap of AI vs ML vs DL

Artificial Intelligence aims to make machines smart enough to perform specific tasks without human intervention. The goal might be playing a video game, performing surgery, and so on. Each of these tasks has many subtasks, which we can tackle with the help of Machine Learning.

Machine Learning is the part of AI that uses mathematical algorithms to find patterns in data, so that what is learned from the data at hand generalizes to new data. Classical ML algorithms work best when the data is structured enough for such patterns to be found.

Deep Learning is the part of Machine Learning that uses multi-layered neural networks to find better patterns, especially when the data is unstructured (images, audio, text) and classical ML algorithms struggle to find them. Loosely speaking, it emulates the way humans gain certain types of knowledge.

Brief History of Deep Learning

Deep learning is not a new concept. Its foundations date back to 1943, when Warren McCulloch and Walter Pitts created a computer model based on the neural networks of the human brain. They used a combination of algorithms and mathematics called “threshold logic” to mimic the thought process. And believe it or not, backpropagation, the essential part of training a neural network, was described as early as 1969 by Bryson and Ho but was largely ignored until the mid-1980s.
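To make “threshold logic” concrete, here is a minimal sketch of a threshold unit in the spirit of McCulloch and Pitts: it adds up its binary inputs with fixed weights and fires (outputs 1) only if the sum reaches a threshold. The function names and the hand-picked weights and thresholds are our own illustrative choices; nothing here is learned from data.

```python
def threshold_unit(inputs, weights, threshold):
    """Fire (return 1) if the weighted sum of the inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0

def logical_and(a, b):
    # Both inputs must be on: 1 + 1 = 2 reaches the threshold of 2.
    return threshold_unit([a, b], [1, 1], threshold=2)

def logical_or(a, b):
    # A single active input is enough to reach the threshold of 1.
    return threshold_unit([a, b], [1, 1], threshold=1)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", logical_and(a, b), "OR:", logical_or(a, b))
```

The weights here are set by hand. A single unit like this also cannot compute XOR, one of the limitations that later motivated multi-layer networks trained with backpropagation.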

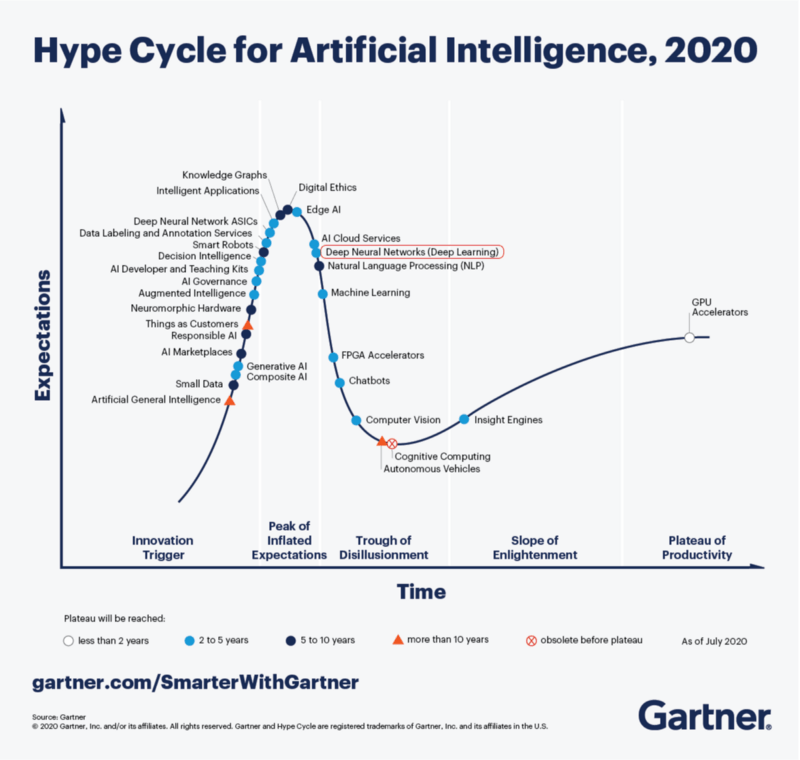

Deep Learning has gained so much traction only recently because we finally have enough data and enough computing power to find something meaningful in it. Earlier, we had neither. Think back to about 10 years ago, when cybercafes were common and we had to pay to use a shared computer. Now we have loads of computing power available on the cloud.

How Does Deep Learning (a Neural Network) Work?

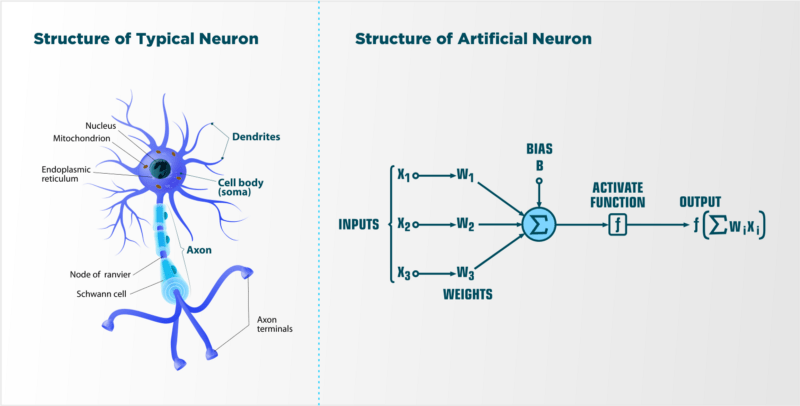

Neural networks are made of layers of nodes, much like the human brain is made up of neurons. The nodes in each layer are connected to the nodes in adjacent layers, just as a single neuron in the human brain receives thousands of signals from other neurons. An Artificial Neural Network (ANN) consists of an input layer, one or more hidden layers, and an output layer. Each node in the hidden and output layers applies a function, called the activation function (ƒ), to the inputs it receives from the previous layer to produce its output.
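As a minimal sketch of what a single node computes, assuming two common activation functions, sigmoid and ReLU (the text does not name a specific one): the node takes a weighted sum of its inputs, adds a bias, and passes the result through the activation function ƒ. The input values, weights, and bias are made-up numbers for illustration.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Pass positive values through unchanged; clip negatives to 0."""
    return max(0.0, z)

def neuron(inputs, weights, bias, activation):
    """One node: weighted sum of inputs plus bias, then the activation function."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

inputs = [0.5, -1.0, 2.0]   # signals coming from the previous layer
weights = [0.4, 0.3, -0.2]  # one weight per incoming connection
bias = 0.1

print(round(neuron(inputs, weights, bias, sigmoid), 3))  # 0.401
print(neuron(inputs, weights, bias, relu))               # 0.0
```

Here the weighted sum plus bias is about -0.4, so the sigmoid outputs roughly 0.4 while ReLU outputs 0. The choice of activation changes what the node passes on to the next layer.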

Initially, the network's weights are essentially random, so its outputs are meaningless. It gets trained on the data we pass to it. As data flows through the nodes from input to output, each input is multiplied by a weight, a bias is added, and the result goes through the activation function. This whole process is called feed forward. A more heavily weighted connection exerts more effect on the next layer of nodes. The final layer combines the weighted inputs to produce an output, which is the network's prediction for the input data.

The values of the weights and biases are important because they directly determine what the network predicts, and therefore what it learns.
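The feed-forward pass described above can be sketched in plain Python as a loop over layers, where each layer is a list of weight rows (one row per node) plus a list of biases. The network shape (2 inputs, 3 hidden nodes, 1 output), the sigmoid activation, the zero biases, and the random initial weights are all assumptions for illustration. Real frameworks do the same computation with fast matrix operations.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def init_layer(n_inputs, n_nodes, rng):
    """Random initial weights (one row per node) and zero biases."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_nodes)]
    biases = [0.0] * n_nodes
    return weights, biases

def layer_forward(inputs, weights, biases):
    """Each node: multiply inputs by its weights, add its bias, apply the activation."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, node_weights)) + b)
        for node_weights, b in zip(weights, biases)
    ]

def feed_forward(inputs, layers):
    """Pass the data through every layer in order; the last output is the prediction."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations

rng = random.Random(0)  # fixed seed so the run is reproducible
layers = [init_layer(2, 3, rng),  # input (2 values) -> hidden layer (3 nodes)
          init_layer(3, 1, rng)]  # hidden layer -> output layer (1 node)

prediction = feed_forward([0.5, 0.8], layers)
print(prediction)  # one value between 0 and 1, meaningless until the network is trained
```

Because the weights are random, the prediction carries no information yet; training is what turns these numbers into something useful.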

At the start of training (when the network has not really learned anything yet), it does a poor job of predicting the correct output. The whole point of training is to find the right weight and bias values for each node so that the neural network (NN) generalizes well. So the actual learning happens when the NN corrects the weights and biases of its nodes.

The error (the difference between the actual and the predicted output) is passed backward, layer by layer, toward the input layer. Along the way, it is used to work out how much each weight and bias contributed to the error, and each one is adjusted slightly to reduce it (typically with gradient descent). This backward pass is called backpropagation, and it is how a neural network learns.
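The feed-forward and backpropagation loop can be sketched for a tiny network. This is a minimal illustration under stated assumptions, not how any framework implements it: a network with 2 inputs, 4 hidden nodes, and 1 output, sigmoid activations, the XOR problem as training data, squared error, and plain per-example gradient descent. The learning rate, epoch count, and random seed are arbitrary choices, and the network is not guaranteed to reach perfect predictions.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# XOR: a single threshold unit cannot solve it, but one hidden layer can.
DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]

rng = random.Random(42)
N_HIDDEN = 4
w_hidden = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(N_HIDDEN)]
b_hidden = [0.0] * N_HIDDEN
w_out = [rng.uniform(-1, 1) for _ in range(N_HIDDEN)]
b_out = 0.0

def forward(x):
    """Feed forward; return hidden activations and the output prediction."""
    h = [sigmoid(sum(xi * wi for xi, wi in zip(x, w)) + b)
         for w, b in zip(w_hidden, b_hidden)]
    y = sigmoid(sum(hi * wi for hi, wi in zip(h, w_out)) + b_out)
    return h, y

def mean_squared_error():
    return sum((forward(x)[1] - t) ** 2 for x, t in DATA) / len(DATA)

LEARNING_RATE = 0.5
initial_loss = mean_squared_error()

for epoch in range(5000):
    for x, target in DATA:
        h, y = forward(x)
        # Error at the output, scaled by the sigmoid's derivative y * (1 - y).
        delta_out = (y - target) * y * (1 - y)
        # Pass the error backward: each hidden node's share depends on its
        # outgoing weight (read here, before that weight is updated).
        delta_hidden = [delta_out * w_out[j] * h[j] * (1 - h[j])
                        for j in range(N_HIDDEN)]
        # Gradient descent: nudge every weight and bias against its gradient.
        for j in range(N_HIDDEN):
            w_out[j] -= LEARNING_RATE * delta_out * h[j]
            for i in range(2):
                w_hidden[j][i] -= LEARNING_RATE * delta_hidden[j] * x[i]
            b_hidden[j] -= LEARNING_RATE * delta_hidden[j]
        b_out -= LEARNING_RATE * delta_out

final_loss = mean_squared_error()
print(f"loss before training: {initial_loss:.4f}, after: {final_loss:.4f}")
for x, target in DATA:
    print(x, "target:", target, "prediction:", round(forward(x)[1], 3))
```

Frameworks such as TensorFlow and PyTorch compute these gradients automatically (automatic differentiation), so in practice you rarely write the backward pass by hand.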

In general, a deeper network (one with more hidden layers) can learn more complex features of the data, but it also increases the computational cost. Data passes through several layers, and each layer passes a progressively more abstract representation of the data to the next one. Early layers learn to detect low-level features such as edges and simple shapes, and later layers combine those features into a more holistic representation. For example, when classifying images of fruit, different layers can learn features of the object such as texture, color, shape, and size.
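To see why depth adds computational cost, a quick back-of-the-envelope check: a fully connected layer with n inputs and m nodes has n × m weights plus m biases. The helper `count_parameters` below is a hypothetical name for illustration, and the layer sizes are arbitrary examples (784 inputs would match a 28 × 28 grayscale image).

```python
def count_parameters(layer_sizes):
    """Total weights plus biases in a fully connected network.

    layer_sizes lists the number of nodes per layer, input layer first.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # weights + biases for this layer
    return total

shallow = [784, 128, 10]         # one hidden layer
deep = [784, 128, 128, 128, 10]  # three hidden layers

print(count_parameters(shallow))  # 101770
print(count_parameters(deep))     # 134794
```

Every parameter means one more multiplication in each feed-forward pass and one more gradient to compute during backpropagation, so adding layers adds work on every training step.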

Why is Deep Learning Important?

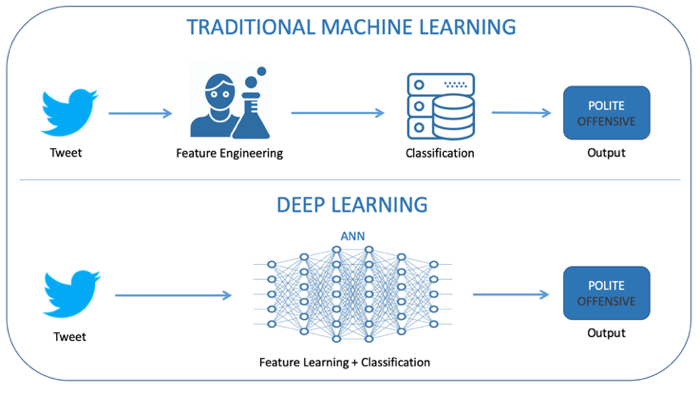

Algorithms learn from features. Classical ML algorithms cannot extract features by themselves, so they struggle when the data is complex and unstructured, like voice, video, images, or text. Deep Learning can perform feature engineering by itself and process a large number of features, which makes it powerful and accurate at prediction on unstructured data. This also reduces the reliance on domain experts, whose job was to hand-engineer features to improve the accuracy of ML algorithms. Deep Learning has gained popularity recently because of the amount of unstructured data we generate every second and its ability to solve real-world problems with big data.

Another reason for its popularity is that it works well with transfer learning (reusing a model trained on one task as the starting point for another) and can be combined with reinforcement learning.

When to Use (and Not Use) Deep Learning?

Deep Learning really shines when the data is unstructured and feature engineering is difficult. However, it needs a lot of data (datasets with hundreds of thousands, or better, millions of data points) to learn from. Huge data and complex DL models in turn demand high-end computational resources.

Deep learning can be overkill when the data is simple. And when the data is small or incomplete, a deep learning model can easily overfit: it memorizes the training data and fails to generalize to new data. Also, in many business cases where interpretability matters, a classical ML algorithm is often the better choice because of its simplicity and explainability.

Challenges in Deep Learning

Deep Learning algorithms are data-hungry and compute-hungry: they typically need large amounts of labeled data to learn from, and therefore a lot of computing power to train. Because DL models learn features from the data by themselves, their outputs are hard to interpret (or explain). DL models can also be fooled, or overfit easily, if there is too little data or it is not properly labeled.

Deep Learning Frameworks

TensorFlow

TensorFlow, developed by the Google Brain team, is arguably one of the most popular deep learning frameworks.

PyTorch/Torch

PyTorch is a fast-growing open-source Python library for deep learning, originally developed by Facebook (now Meta).

Deeplearning4j

The j in Deeplearning4j stands for Java. It is a deep learning library for the Java Virtual Machine (JVM). It is developed in Java and supports other JVM languages like Scala, Clojure, and Kotlin.

The Microsoft Cognitive Toolkit

The Microsoft Cognitive Toolkit (formerly known as CNTK) is an open-source toolkit for commercial-grade distributed deep learning.

Keras

Keras is a deep learning API written in Python, running on top of the machine learning platform TensorFlow. It was developed with a focus on enabling fast experimentation. Being able to go from idea to result as fast as possible is key to doing good research.

ONNX

ONNX, the Open Neural Network Exchange, is an open-source format for representing deep learning models. Developed by Microsoft and Facebook, it is not a training framework itself; instead, it enables developers to move models easily between frameworks and platforms.

Caffe

Caffe is a deep learning framework made with expression, speed, and modularity in mind. It is developed by Berkeley AI Research (BAIR) and by community contributors.

Deep Learning Playground

The Deep Learning Playground will be helpful for newcomers to this field.

Deep learning does not have to be intimidating. It just has many complicated words that, when explained in simple terms, mean something you already know and can relate to pretty quickly. Hopefully, this article will ignite a curiosity inside you to learn more about this beautiful field and contribute to it.

If you like what we do and want to know more about our community 👥 then please consider sharing, following, and joining it. It is completely FREE.


Join here: https://blogs.colearninglounge.com/join-us